Robotics for manufacturing

ME researchers are exploring how robotics and AI can help improve manufacturing workers’ safety, standardize processes and more.

By: Lyra Fontaine

Photo: Dennis Wise / University of Washington

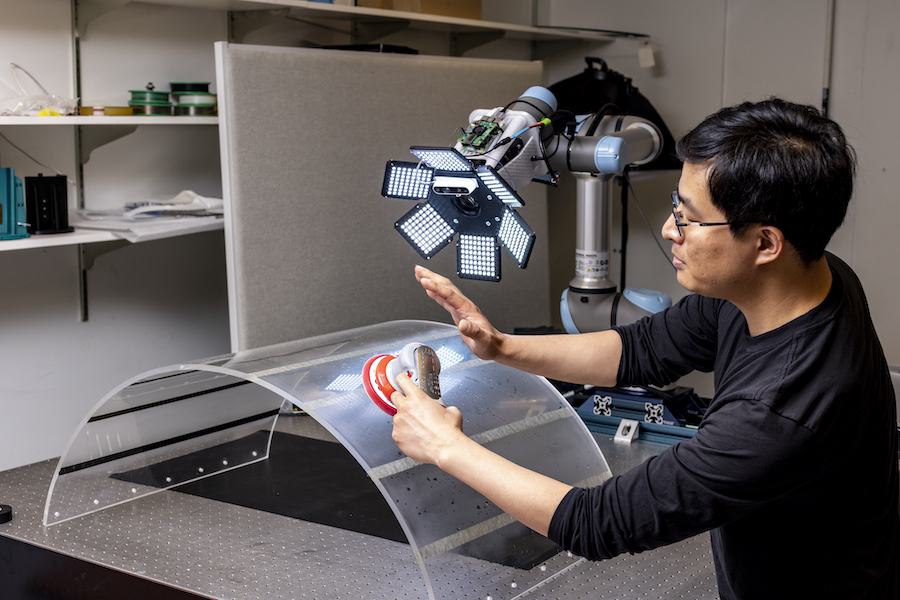

Top image: ME researchers are using a robot with a 2D stereo camera and a parallel gripper (shown above) that has pressure sensors. They developed an algorithm that can detect when objects are slipping out of the grasper.

Working alongside industry partners, ME researchers are exploring ways to improve manufacturing workers’ safety, automate inspections and enhance robots’ abilities to interact with objects around them.

In the UW Mechatronics, Automation and Control Systems Laboratory (MACS Lab), researchers study how machines and automation processes can positively impact people’s lives. The lab is led by Xu Chen, Bryan T. McMinn Endowed Research Professor in Mechanical Engineering.

“Artificial intelligence is creating significant new opportunities,” Chen says. “Enabling robots to intelligently manipulate objects could assist workers in completing manufacturing tasks. I am really excited to solve challenges in this space.”

In the Boeing Advanced Research Center, ME Assistant Professor Krithika Manohar develops algorithms to predict and control complex dynamical systems, in which conditions evolve over time in ways that can be hard to predict. Her work includes optimizing sensors for decision-making in aircraft manufacturing.

“AI and machine learning are very powerful in this space because engineering processes are strictly regulated,” she says. “Your flight tests have to be extremely accurate. An airplane wing needs to be within a strict range of measurements in order to work properly. AI models, when applied to these very well-controlled processes, can learn the patterns much easier. They can find the variables that affect the defects of these parts.”

Manohar enjoys how her work is applied to real-life manufacturing processes, such as predicting shim gaps in aircraft.

“I can see it being applied and how it affects these real engineering decisions,” she says. “You can see it in an actual wing.”

A robotic grasper

How do you teach robots to grip objects and detect when the objects are slipping? A new project in the MACS Lab combines visual and tactile feedback in industrial robots that perform tasks alongside human workers. Previous studies have proposed only visual or only tactile feedback algorithms to grasp objects. This project, funded by the UW + Amazon Science Hub, mimics how humans use both vision and touch to grip objects.

The researchers, including ME Ph.D. student Xiaohai Hu and master’s students Apra Venkatesh and Guiliang Zheng, are experimenting with a robot equipped with a 2D stereo camera and a parallel gripper that has pressure sensors. They developed an algorithm that correctly detects an object slipping from the robotic grasper in more than 99% of cases.
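
The article does not describe how the team’s algorithm fuses the two signals. As a purely illustrative sketch under stated assumptions, one simple way to combine vision and touch is to normalize each cue against its own threshold and add them, so that either strong cue alone, or two weaker cues together, flags a slip. The `Reading` fields, the per-frame offset and pressure values, and the `px_scale`/`drop_scale` thresholds are all hypothetical and are not the MACS Lab’s method.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    # Hypothetical per-frame inputs; the article does not specify the signals used.
    object_offset_px: float  # object position relative to the fingers, from the camera
    total_pressure: float    # summed reading of the gripper's pressure sensors


def slip_steps(readings: list[Reading], px_scale: float = 3.0,
               drop_scale: float = 0.2) -> list[int]:
    """Return indices of time steps flagged as slipping (toy visual + tactile fusion).

    Each cue is normalized so that reaching its scale contributes 1.0;
    a step is flagged when the summed evidence reaches 1.0.
    """
    flagged = []
    for t in range(1, len(readings)):
        prev, cur = readings[t - 1], readings[t]
        # Visual cue: how far the object moved relative to the fingers since the last frame.
        motion = abs(cur.object_offset_px - prev.object_offset_px) / px_scale
        # Tactile cue: fractional loss of contact pressure; pressure increases are ignored.
        if prev.total_pressure > 0:
            loss = max(0.0, prev.total_pressure - cur.total_pressure)
            drop = loss / prev.total_pressure / drop_scale
        else:
            drop = 0.0
        if motion + drop >= 1.0:
            flagged.append(t)
    return flagged


readings = [Reading(0.0, 10.0), Reading(0.5, 10.0), Reading(2.5, 9.0),
            Reading(2.5, 9.0), Reading(6.0, 9.0), Reading(6.0, 7.0)]
print(slip_steps(readings))
```

In this made-up sequence, step 2 is flagged even though neither the 2-pixel shift nor the 10% pressure drop crosses its threshold alone; steps 4 and 5 are flagged by vision alone and touch alone, respectively. That is the kind of case where using both senses can help, though a real system would be far more involved.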

The team tested their approach with 10 common objects, including a sponge, box, tennis ball and screwdriver. They also demonstrated pulling a book from a shelf of objects.

“This process seems intuitive but is actually quite dynamic and difficult for robotic graspers,” Chen says. “Robotic grasping is a complex task that involves challenging perception issues, planning and executing precise interactions, and utilizing advanced reasoning. In our demos, the friction also changes during the process.”

The ability to detect and prevent slipping could be useful for robots that work alongside people in environments such as warehouses or manufacturing facilities. For example, a grasper with slip detection could hold and move heavy objects such as machinery parts or automotive components, pick up fragile items without damaging them, sort items such as packages and handle wet or slippery items like produce.

Now that they have a better understanding of grasping and can detect when objects slip, the team hopes to have the gripper respond by increasing its grip force or changing where it grasps the object, preventing the object from falling.


Improving workers' safety

In 2021, 334,500 U.S. workers in the manufacturing industry had a recorded nonfatal workplace injury, according to the U.S. Bureau of Labor Statistics. Workers can be injured by repeatedly performing manual tasks in aerospace manufacturing, such as composite hand layup, in which layers of composite material are applied and smoothed by hand to conform to a part and then cured.

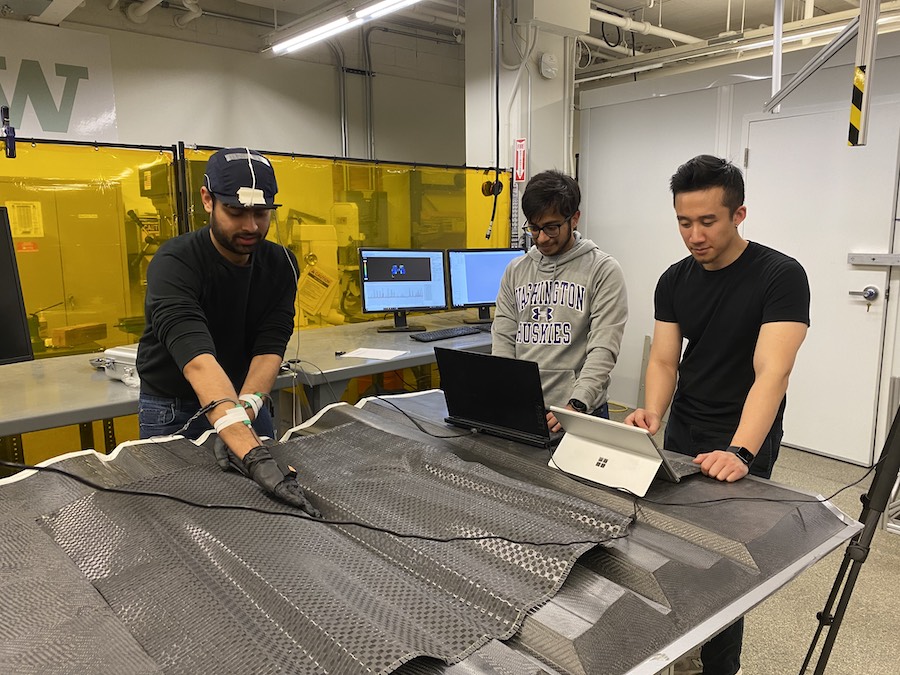

Manohar and Ashis Banerjee, associate professor of ME and of industrial and systems engineering, are working on quantifying the ergonomic risk faced by Boeing technicians who perform hand layup of composite materials. To do this, they collect data from participating employees, who wear a head-mounted motion-tracking camera and pressure-sensing gloves as they work.

The researchers use the data from the camera and gloves to automatically assess a worker’s body pose and identify movements associated with injury. They then quantify levels of ergonomic risk using established industry-standard metrics. The project is jointly funded by The Boeing Company and the Joint Center for Aerospace Technology Innovation (JCATI).
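
The article does not name which industry-standard metric the team uses. RULA (Rapid Upper Limb Assessment) is one widely used example. The sketch below is a simplified assumption, not the researchers’ pipeline: it estimates shoulder flexion from three hypothetical side-view keypoints (shoulder, hip, elbow), maps the angle to RULA’s upper-arm base score, and summarizes how often a sequence of frames reaches a higher-risk score. A full RULA assessment also scores the lower arm, wrist, neck, trunk, legs, load and muscle use, and applies shoulder adjustments, all omitted here; `high_risk_fraction` and its threshold are illustrative choices.

```python
import math

Point = tuple[float, float]  # hypothetical (forward, up) coordinates in a side view


def shoulder_flexion_deg(shoulder: Point, hip: Point, elbow: Point) -> float:
    """Signed angle from the trunk's downward direction to the upper arm.

    Positive = arm raised forward (flexion); negative = arm behind the trunk (extension).
    """
    tx, ty = hip[0] - shoulder[0], hip[1] - shoulder[1]      # trunk vector, pointing down
    ax, ay = elbow[0] - shoulder[0], elbow[1] - shoulder[1]  # upper-arm vector
    cross = tx * ay - ty * ax
    dot = tx * ax + ty * ay
    return math.degrees(math.atan2(cross, dot))


def rula_upper_arm_base(flexion: float) -> int:
    """RULA upper-arm base score from posture alone (no raise/abduction/support adjustments)."""
    if -20 <= flexion <= 20:
        return 1
    if flexion < -20 or flexion <= 45:
        return 2
    if flexion <= 90:
        return 3
    return 4


def high_risk_fraction(frames: list[tuple[Point, Point, Point]], threshold: int = 3) -> float:
    """Share of frames whose upper-arm score is at or above `threshold` (hypothetical summary)."""
    scores = [rula_upper_arm_base(shoulder_flexion_deg(*f)) for f in frames]
    if not scores:
        return 0.0
    return sum(s >= threshold for s in scores) / len(scores)


frames = [
    ((0, 0), (0, -1), (0, -1)),          # arm hanging down: about 0 degrees
    ((0, 0), (0, -1), (0.866, -0.5)),    # about 60 degrees of flexion
    ((0, 0), (0, -1), (0.5, 0.866)),     # about 150 degrees: arm raised overhead
    ((0, 0), (0, -1), (0.5, -0.866)),    # about 30 degrees of flexion
]
print(high_risk_fraction(frames))
```

Scoring each frame and then aggregating mirrors the idea of replacing a one-off subjective judgment with a measure computed over the whole task, though the choice of aggregate here is only one possibility.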

“While robots can do automated fiber placement, it’s difficult to duplicate the performance of a human worker,” Manohar says. “Currently, there is no way to quantifiably assess what is happening to the worker as they are doing these processes. We’re quantifying the risk to workers in a data-driven way, instead of having a subjective evaluation.”

This assessment could also be relevant to workers who install rivets or do any other work that stresses the hands.

Automating visual inspection

Manufacturers sand aircraft parts to smooth them and identify defects before painting. The MACS Lab is part of a team with GKN Aerospace, GrayMatter Robotics and EWI that’s developing a way to robotically sand aircraft parts.

The MACS Lab is focused on one key part of the project: automating the visual inspection of sanded aircraft canopies and windshields. To make this easier, the researchers created a robot that inspects the parts after sanding. A high-resolution camera captures detailed images of the surface, and the system detects defects such as scratches and chips.
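
The article does not say how the lab’s system identifies defects in its images. A classical baseline, shown here only as a sketch under stated assumptions, flags pixels whose brightness deviates strongly from the image’s overall statistics and groups neighboring flagged pixels into candidate defects using connected-component labeling. The grayscale list-of-lists input, the `k` threshold and the `min_pixels` size filter are hypothetical choices. A real canopy inspection would have to handle lighting variation and curved, transparent surfaces that a single global threshold ignores.

```python
from collections import deque
from statistics import mean, pstdev


def find_defects(image: list[list[float]], k: float = 3.0,
                 min_pixels: int = 2) -> list[tuple[int, int, int, int]]:
    """Return bounding boxes (row0, col0, row1, col1) of candidate defects.

    A pixel is anomalous if its brightness differs from the image mean by more
    than k standard deviations; 4-connected anomalous pixels form one candidate,
    and candidates smaller than min_pixels are discarded as noise.
    """
    pixels = [p for row in image for p in row]
    mu, sigma = mean(pixels), pstdev(pixels)
    if sigma == 0:
        return []  # perfectly uniform image: nothing stands out
    rows, cols = len(image), len(image[0])
    mask = [[abs(image[r][c] - mu) > k * sigma for c in range(cols)] for r in range(rows)]
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill over 4-connected anomalous pixels.
            queue = deque([(r, c)])
            seen[r][c] = True
            members = []
            while queue:
                y, x = queue.popleft()
                members.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(members) >= min_pixels:
                ys = [m[0] for m in members]
                xs = [m[1] for m in members]
                boxes.append((min(ys), min(xs), max(ys), max(xs)))
    return boxes


# Synthetic 10x10 surface: a dark 4-pixel "scratch" plus one isolated dark pixel.
image = [[100] * 10 for _ in range(10)]
for col in range(2, 6):
    image[5][col] = 20
image[0][9] = 20
print(find_defects(image))
```

With `min_pixels=2`, the isolated pixel is treated as noise and only the scratch is reported, illustrating the kind of rule-based consistency an automated inspector can apply to every part.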

This technology could support workers who inspect the entire surface of aircraft parts for sanding mistakes, a process that is difficult to standardize.

“We automated the process to improve consistency,” says ME graduate student and MACS Lab researcher Colin Acton.

The MACS Lab has worked on the robotic inspection of parts for more than three years. Previously, they worked with GKN Aerospace on a project to automate the defect inspection of complex metallic parts. The technology they developed found more than 95% of defects. Currently, Chen and Manohar are working with GE on a project to robotically inspect composite aircraft engine parts.

Others involved in the projects include ME graduate students SangYoon Back, BT Lohitnavy and Thomas Chu and ME alum Hui Xiao, ’21.

Research was sponsored by the ARM (Advanced Robotics for Manufacturing) Institute through a grant from the Office of the Secretary of Defense and was accomplished under Agreement Number W911NF-17-3-0004. The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the Office of the Secretary of Defense or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation herein.