By Lyra Fontaine

ME Ph.D. candidate Ekta Samani’s research aims to improve visual perception in autonomous robots.

Using computational topology tools and human cognition, Ekta Samani trains her robot to recognize partially obscured objects in new environments.

Ph.D. candidate Ekta Samani envisions robots someday being able to navigate new environments to perform useful tasks. One example is sorting recyclable and non-recyclable materials at waste facilities, where the variety of items is constantly changing and can be unpredictable.

Robots typically navigate spaces that are designed for their operation, including warehouses and grocery stores where items are neatly organized. The robots are trained to identify items by repeatedly looking at different object placements. While this method works for structured areas, it fails in cluttered environments, such as recycling facilities or a messy closet. This is partly because it’s impossible to train a robot on every object placement it may encounter.

To solve this challenge, Samani developed a new method that emulates human object recognition in robots.

“Using computational topology tools, I develop methods for robots to operate in environments that are unknown to them, so they can operate in our homes or another new environment,” she says. “My methods are also inspired by human perception. I researched cognitive development in infants to see how they develop some of these skills.”

Combining topology and cognitive psychology

Topology is an area of mathematics that studies the connectivity between different parts of objects. Samani uses computational topology-based methods to capture an object’s underlying shapes. This helps robots build representations of an object’s appearance, including its color.

Samani also replicated in her robot how humans reason about the hidden portions of partially obscured objects to recognize them, a process known in cognitive psychology as object unity. This enabled the robot in her recent studies to recognize objects, regardless of how they’re placed.

“Humans compute representations of every object they see,” she says. “These representations encode information such as shape and visual appearance. To recognize any object, they match its representation with those in their memory. I replicated that reasoning mechanism into my framework so the robot can use it to perceive objects in cluttered spaces.”

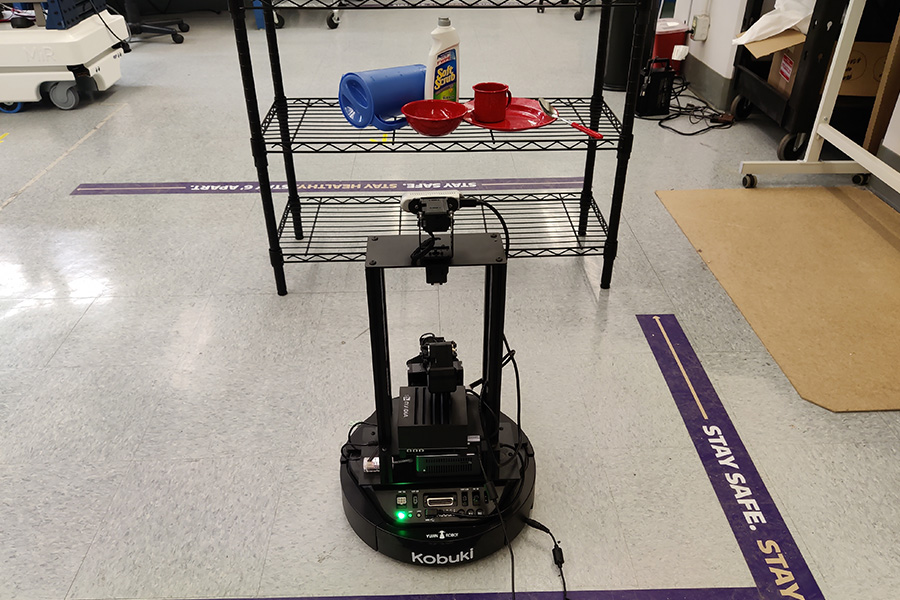

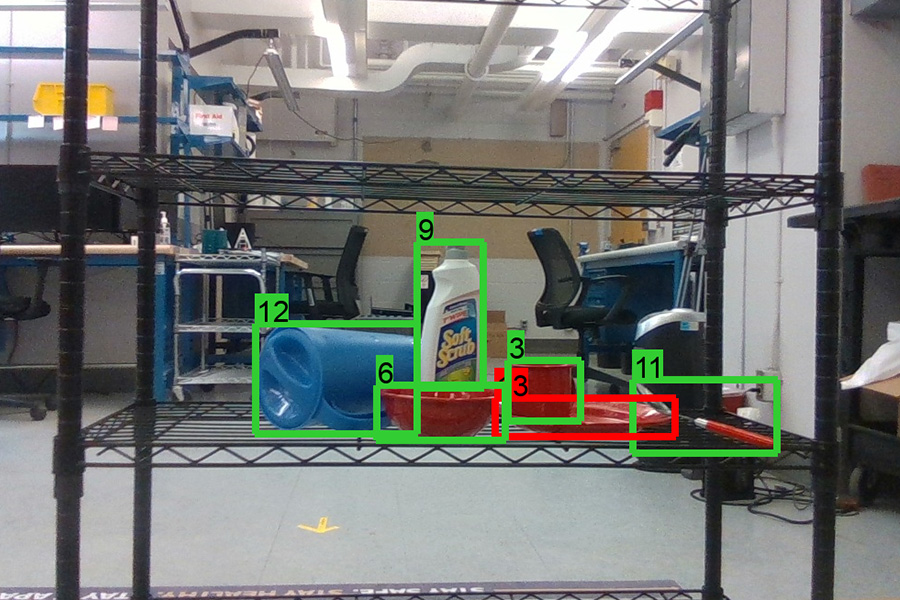

In recent experiments, Samani navigated a robot around her lab. Using her methods, the robot could recognize most of the household objects placed on a shelf, even if the items were partially obscured. Green rectangles mark objects that the robot recognized, and red rectangles mark objects it had trouble recognizing.

In recent experiments, Samani showed a robot computer-generated pictures of all the objects it could potentially see in her research lab, which the robot stored as references. The lab, where common household objects such as dishes and cleaning supplies were placed on shelves, was a new environment for the robot. Using Samani’s methods, the robot could recognize objects as she navigated it around the room, even when the items were partially obscured. The robot could match partial objects it saw with pictures of the complete objects.

Samani hopes her work is one step toward enabling robots to sort waste into recyclable and non-recyclable materials, a task that can be unsafe for people because it may involve sharp objects or exposure to hazardous materials. When Samani recently tested her method in her lab, the robot successfully recognized the objects in a trash can.

“You cannot predict the contents of a trash or recycling bin,” she says. “We need methods that enable robots to operate in environments not specifically designed for autonomous systems.”

Samani recently shared her research about helping robots navigate unstructured spaces with the global robotics community. She was one of 30 senior Ph.D. students and early-career researchers across the globe accepted to this year’s Robotics: Science and Systems Pioneers intensive workshop. In July, Samani visited South Korea to attend the workshop, meet other robotics researchers, share her work and learn from senior researchers in the field. Earlier this year, she shared her studies in the 2023 UW Three Minute Thesis competition and received the second-place prize.

Making life easier for people

Ekta Samani and ME Associate Professor Ashis Banerjee

Samani first connected with her mentor Ashis Banerjee, associate professor of ME and of industrial and systems engineering, during her undergraduate education at the Indian Institute of Technology, Gandhinagar.

“The distinguishing aspect of Ekta’s research is her ability to synthesize concepts and techniques from different disciplines – namely machine learning, computational topology and human cognition – to come up with novel and practical solutions to challenging autonomous robot perception problems,” Banerjee says.

Banerjee’s research on improving robotic perception resonated with Samani, who’s also interested in designing autonomous systems that make life easier for people.

“I think robots are meant to help us, not replace us,” she says.

Samani has continued to work with Banerjee while at the UW. She received her ME master’s degree in 2021 and hopes to complete her Ph.D. this summer.

After graduating, she will join a cohort of researchers in Amazon’s Postdoctoral Science Program that will explore solutions to teams’ challenges. She plans to continue expanding her knowledge of topology and applying it to a broad range of automation problems.

“I’m looking forward to working on some challenging problems at Amazon,” Samani says. “The solutions have to be deployed at a huge scale because they work with so many customers, products and teams. I’m excited to gain industry experience so I can become a more polished researcher.”

In her future career, Samani hopes to continue researching robotics and automation with one goal in mind: “I want to make a positive impact on people’s lives,” she says.

Originally published July 31, 2023